Code-Mixed Text Analysis & Generation

Developing tools and methodologies for analyzing multilingual and code-switched text

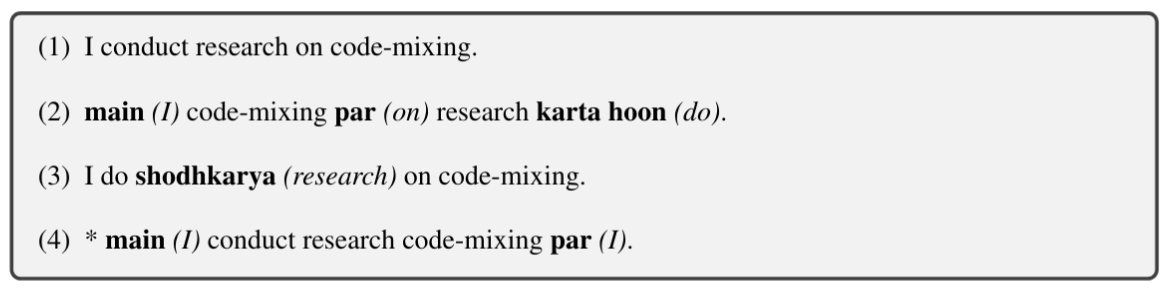

Code-mixing is the tendency of multilingual speakers to alternate between two or more languages. It occurs predominantly in speech and in informal text such as user-generated content (UGC): comments, posts, and so on.

Code-mixed text is prevalent on online social networks (OSNs) and in UGC. Even if it accounts for only a small percentage of content, at scale it is still a problem worth the effort. The motivations for code-mixing are socio- and psycholinguistic: for example, a speaker may want to signal informality, may not know a word in their primary language, or may want to express a particular emotion.

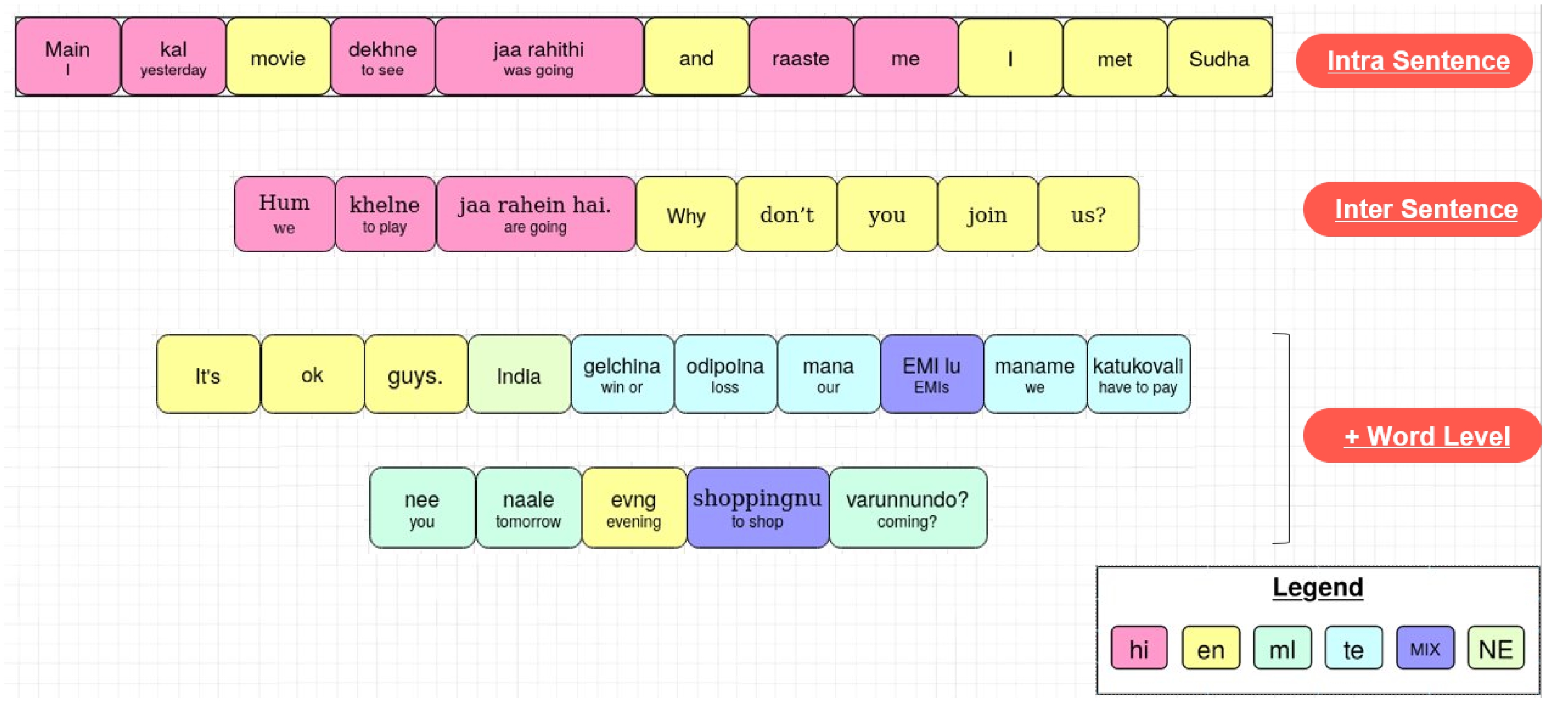

Code-mixing occurs at various levels: across sentences, within a sentence, and within a word.

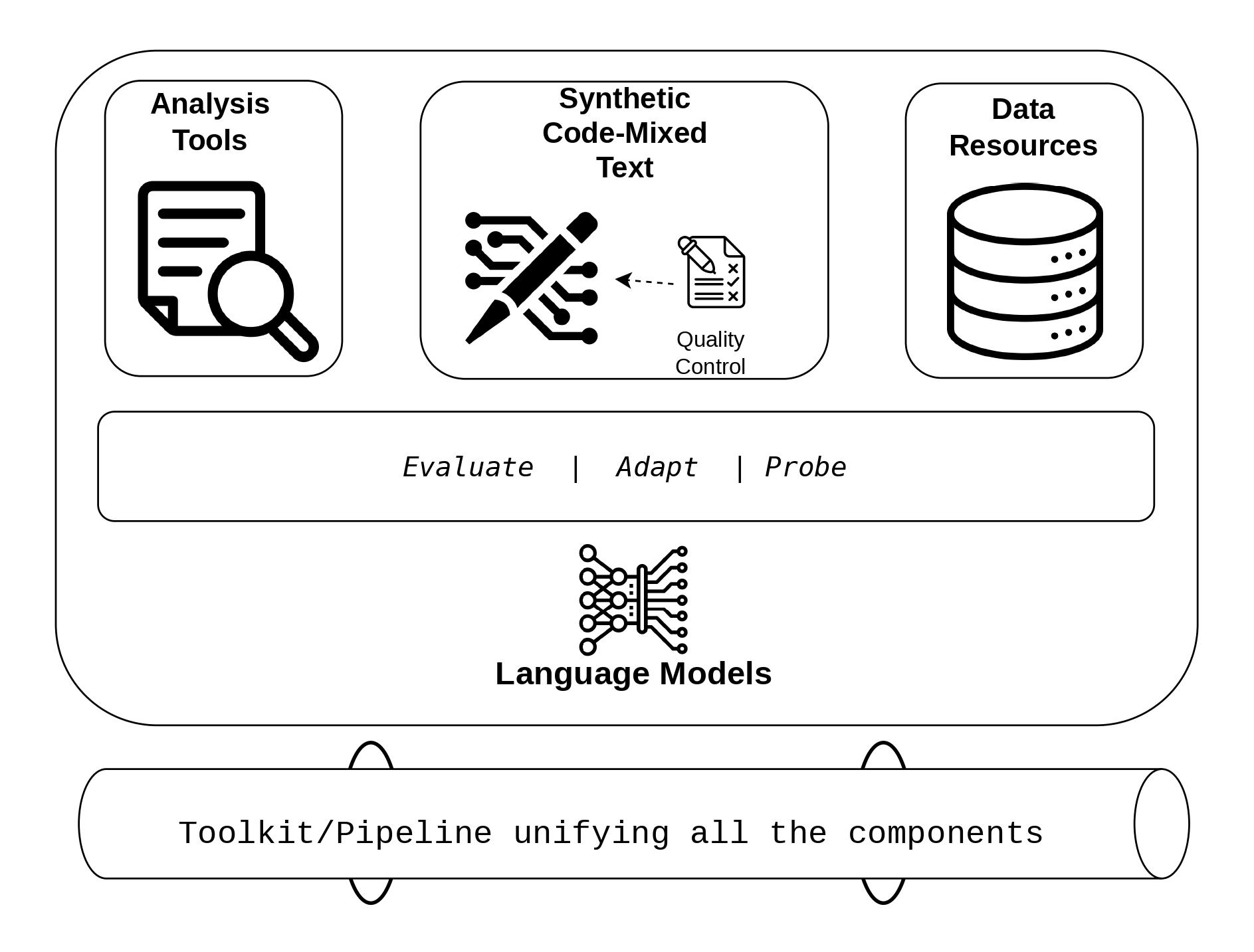

Our work on code-mixed text deals with three primary aspects:

- Analysis of code-mixed text: improving methods to linguistically analyze code-mixed text

- Generation of code-mixed text: improving generation by building quality-control measures over synthetic code-mixed text

- Build a toolkit that combines various data resources, models, and pipelines suitable for code-mixed text

Syntactic Analysis of Code-Mixing

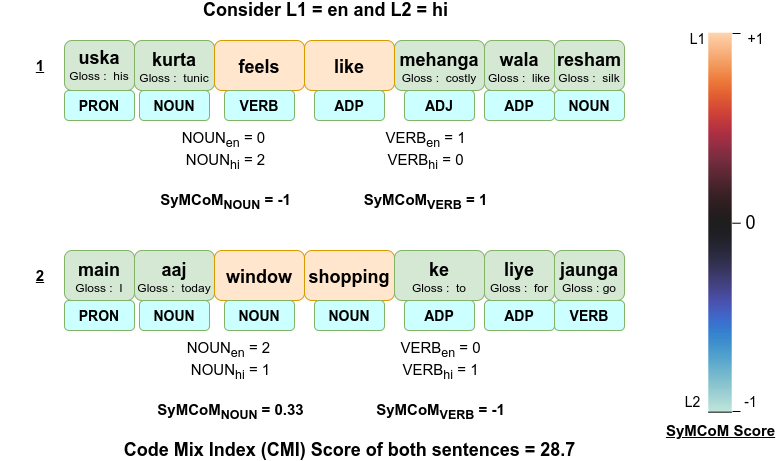

To capture the variety of code-mixing within and across corpora, measures based on Language ID (LID) tags, such as the Code-Mixing Index (CMI), have been proposed. However, the syntactic variety and patterns of code-mixing, and their relationship to the performance of computational models, are underexplored. In this work, we investigate a collection of English (en)-Hindi (hi) code-mixed datasets through a syntactic lens and propose SyMCoM, an indicator of syntactic variety in code-mixed text with intuitive theoretical bounds. We train a state-of-the-art en-hi PoS tagger (93.4% accuracy) to reliably compute PoS tags on a corpus, demonstrate the utility of SyMCoM by applying it to various syntactic categories on a collection of datasets, and compare datasets using the measure. Please refer to our paper (Kodali et al., 2022) for more details. This paper was accepted at Findings of ACL 2022.
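To make the idea of a PoS-level measure concrete, here is a minimal sketch of how a SyMCoM-style score could be computed from tokens that carry both a LID tag and a PoS tag. The per-category formula (a normalized difference of language counts, bounded in [-1, 1]) and the sentence-level aggregation (a frequency-weighted mean of absolute scores) are assumptions made for illustration; the exact definition is in the paper. The function name `symcom_scores` and the hand-tagged example sentence are hypothetical.

```python
from collections import Counter


def symcom_scores(tagged_tokens, l1="en", l2="hi"):
    """Return (per-PoS scores, sentence-level score) for one sentence.

    tagged_tokens: list of (token, lang, pos) triples.
    Assumed formulation, for illustration only:
      score(pos) = (count_l1 - count_l2) / (count_l1 + count_l2), in [-1, 1]
      sentence   = sum over pos of (share of tokens with pos) * |score(pos)|
    """
    counts = Counter()
    for _token, lang, pos in tagged_tokens:
        if lang in (l1, l2):  # ignore language-independent tokens
            counts[(pos, lang)] += 1

    total = sum(counts.values())
    per_pos = {}
    for pos in {pos for pos, _lang in counts}:
        c1, c2 = counts[(pos, l1)], counts[(pos, l2)]
        per_pos[pos] = (c1 - c2) / (c1 + c2)

    sentence = 0.0
    if total:
        sentence = sum(
            (counts[(pos, l1)] + counts[(pos, l2)]) / total * abs(score)
            for pos, score in per_pos.items()
        )
    return per_pos, sentence


# Hand-tagged toy sentence (tags are illustrative, not tagger output).
example = [
    ("mujhe", "hi", "PRON"), ("yeh", "hi", "DET"), ("movie", "en", "NOUN"),
    ("aur", "hi", "CCONJ"), ("songs", "en", "NOUN"), ("pasand", "hi", "NOUN"),
    ("hain", "hi", "VERB"),
]
per_pos, sentence_score = symcom_scores(example)
print(per_pos["NOUN"], sentence_score)
```

Under this assumed formulation, a score near +1 or -1 means a category is drawn almost entirely from one language, while a score near 0 means both languages contribute equally to that category.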

Unravelling Acceptability in Code-Mixed Sentences

Current computational approaches for analysing or generating code-mixed sentences do not explicitly model the “naturalness” or “acceptability” of code-mixed sentences; instead, they rely on training corpora to reflect the distribution of acceptable code-mixed sentences. Modelling human judgement of the acceptability of code-mixed text can help distinguish natural code-mixed text and enable quality-controlled generation of code-mixed text.

To this end, we construct Cline, a dataset containing human acceptability judgements for English-Hindi (en-hi) code-mixed text. Cline is the largest of its kind, with 16,642 sentences drawn from two sources: synthetically generated code-mixed text and samples collected from online social media. Our analysis establishes that popular code-mixing metrics such as CMI, Number of Switch Points, and Burstiness, which are used to filter, curate, and compare code-mixed corpora, have low correlation with human acceptability judgements, underlining the necessity of our dataset. Experiments using Cline demonstrate that simple Multilayer Perceptron (MLP) models trained solely on code-mixing metrics are outperformed by fine-tuned pre-trained Multilingual Large Language Models (MLLMs). Specifically, XLM-RoBERTa and Bernice outperform IndicBERT across different configurations in challenging data settings. Comparison with ChatGPT’s zero-shot and few-shot capabilities shows that MLLMs fine-tuned on larger data outperform ChatGPT, providing scope for improvement in code-mixed tasks. Zero-shot transfer from English-Hindi to English-Telugu acceptability judgements using our model checkpoints proves superior to random baselines, enabling application to other code-mixed language pairs and providing further avenues of research. We publicly release our human-annotated dataset, trained checkpoints, code-mix corpus, and code for data generation and model training. Please refer to our paper (Kodali et al., 2024) for more details. This paper is under review at a journal.
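For readers unfamiliar with the LID-based metrics mentioned above, the following sketch computes two of them for a single utterance: CMI in its commonly cited utterance-level form, 100 * (1 - max_lang_count / (n - u)), and the number of switch points. The tag name `univ` for language-independent tokens and the choice to skip such tokens when counting switches are assumptions for illustration; the implementations used in the paper may differ.

```python
from collections import Counter

# Assumed label set for language-independent tokens (punctuation, names, etc.).
LANG_INDEPENDENT = frozenset({"univ"})


def cmi(lang_tags, lang_independent=LANG_INDEPENDENT):
    """Utterance-level Code-Mixing Index in [0, 100)."""
    n = len(lang_tags)
    u = sum(1 for tag in lang_tags if tag in lang_independent)
    if n == u:  # no language-specific tokens at all
        return 0.0
    counts = Counter(tag for tag in lang_tags if tag not in lang_independent)
    return 100.0 * (1 - max(counts.values()) / (n - u))


def switch_points(lang_tags, lang_independent=LANG_INDEPENDENT):
    """Count adjacent language changes, skipping language-independent tokens."""
    langs = [tag for tag in lang_tags if tag not in lang_independent]
    return sum(1 for prev, cur in zip(langs, langs[1:]) if prev != cur)


tags = ["hi", "hi", "en", "hi", "en", "hi", "hi", "univ"]
print(round(cmi(tags), 2), switch_points(tags))  # prints: 28.57 4
```

Both metrics look only at the sequence of language tags, which is one reason they can diverge from human judgements of whether a sentence sounds natural.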

Task-Oriented Dialog Dataset for Code-mixed Languages

Efforts on task-oriented dialogue agents have predominantly concentrated on a few widely spoken languages, limiting global adoption of dialogue technology. We created a multi-domain, large-scale, and high-quality task-oriented dialogue benchmark, produced by translating the Chinese RiSAWOZ data to Hindi and code-mixed English-Hindi. The dataset was translated in parallel to other languages (English, French, and Korean) by other collaborators in the work, and together these form the collective X-RiSAWOZ datasets. Please refer to our paper (Moradshahi et al., 2023) for more details. This paper was accepted at Findings of ACL 2023.

Our Ongoing Efforts

- Adapting Multilingual Language Models for Code-Mixed Settings: As multilingual language models are increasingly used in code-mixed settings, we are working on adapting them to these settings by leveraging all available data resources: labeled and unlabeled, monolingual and code-mixed. Figure 6.1 presents various data availability scenarios for code-mixed tasks. Ideally, access to both labeled and unlabeled data allows for continued pre-training followed by fine-tuning. However, labeled or unlabeled data might not be available for the languages concerned, yet be available for other language(s), either monolingual or code-mixed. At the other extreme, neither task-specific labeled nor unlabeled data is available. This raises the question of what the optimal strategy is for building models of code-mixing under various resource availability scenarios. We intend to use recent parameter-efficient and modular techniques, such as adapters and model augmentation, to exploit the different data resources effectively and improve the performance of multilingual language models on code-mixed tasks. We expect this work to be completed by December 2024.

- Toolkit Development: We are developing a toolkit comprising all the resources and models developed in the thesis, for easy access and use by the research community. The toolkit will provide the tools needed to standardize pipelines for computational code-mixed research. It will contain resources created as part of the thesis, along with previously published resources released by the research community. The toolkit will be released as an open-source project and will be maintained for future updates and contributions. The essential components of the toolkit will be:

- Data Resources

  - Large-scale synthetic unlabeled corpora for En-Hi, En-Kn, and En-Te, generated using GCM.

  - Annotated task-specific datasets: Dialog, Acceptability for En-Hi combined

  - Tools for creating/curating code-mixed data: a modified GCM toolkit for ease of use; a rule-based approach for replacing tokens/phrases.

- Analysis Tools

  - Tools for computing various code-mixing metrics (e.g., CMI, SyMCoM)

  - Visualization tools for code-mixed sentences

- Models: trained checkpoints and codebase for training

  - PoS Tagger

  - Acceptability Classifier

  - Multilingual pretrained models domain-adapted to En-Hi code-mixed text.
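As an illustration of the rule-based token replacement listed among the data tools, here is a minimal sketch that swaps tokens of a romanized Hindi sentence for English equivalents using a bilingual lexicon, and emits LID tags alongside. This is not the toolkit's implementation: the function `rule_based_mix`, the toy lexicon, and the example sentence are all hypothetical, and a practical system would likely add constraints (for example on PoS or phrase boundaries) that are omitted here.

```python
def rule_based_mix(tokens, lexicon, matrix_lang="hi", embedded_lang="en"):
    """Replace tokens found in `lexicon` and return (mixed tokens, LID tags).

    tokens:  tokens of a monolingual sentence in the matrix language.
    lexicon: dict mapping lowercased matrix-language words to
             embedded-language replacements (hypothetical toy data here).
    """
    mixed, lids = [], []
    for token in tokens:
        replacement = lexicon.get(token.lower())
        if replacement is not None:
            mixed.append(replacement)
            lids.append(embedded_lang)
        else:
            mixed.append(token)
            lids.append(matrix_lang)
    return mixed, lids


toy_lexicon = {"kitaab": "book", "dost": "friend"}  # illustrative entries only
tokens = "mere dost ne yeh kitaab di".split()
mixed_tokens, lid_tags = rule_based_mix(tokens, toy_lexicon)
print(" ".join(mixed_tokens))  # prints: mere friend ne yeh book di
print(lid_tags)
```

Returning LID tags together with the mixed tokens means the output can be passed directly to LID-based metrics such as CMI.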