Towards Increased Accessibility of Meme Images with the Help of Rich Face Emotion Captions

CVIT, IIIT Hyderabad

Precog, IIIT Delhi

ACM Multimedia 2019

Abstract

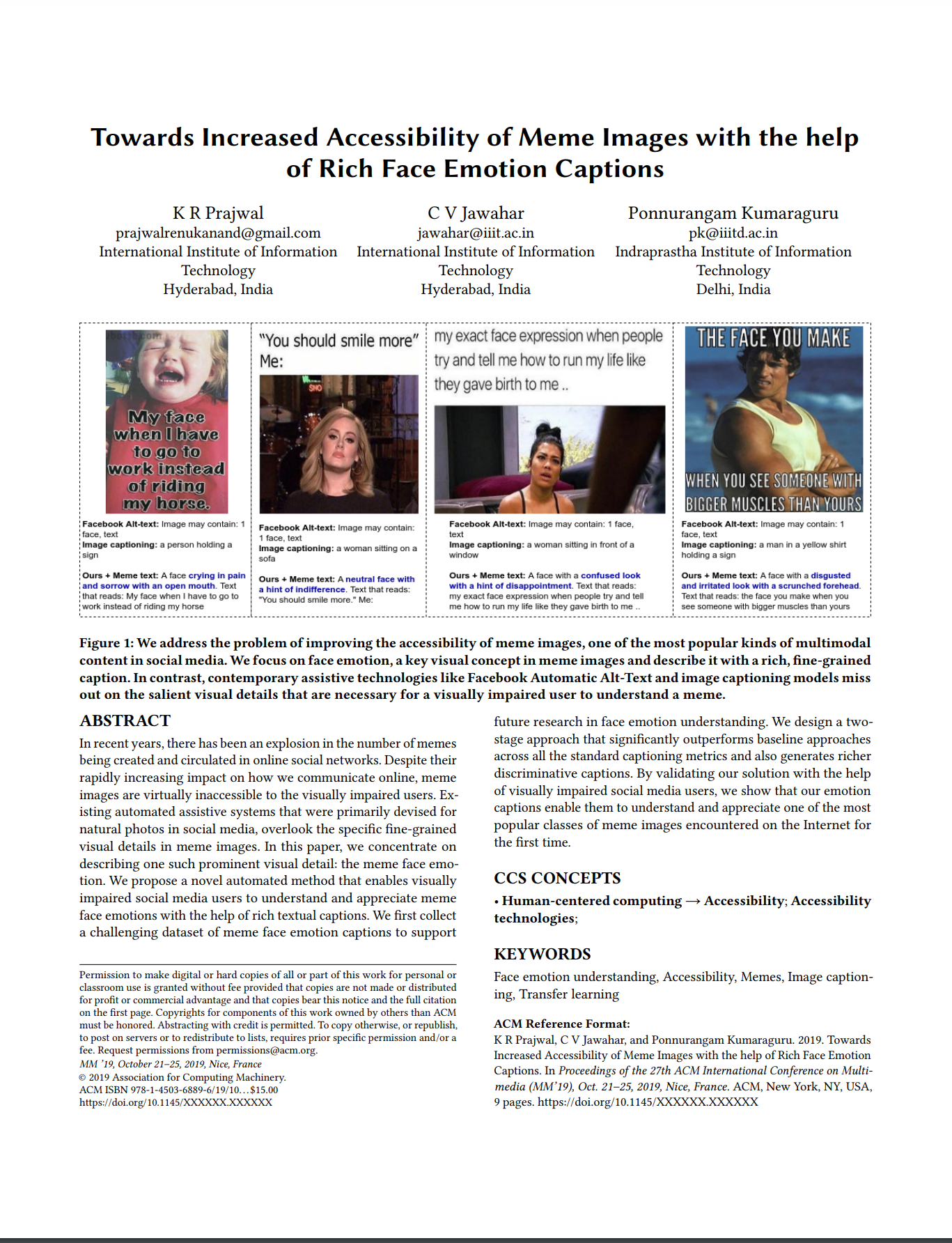

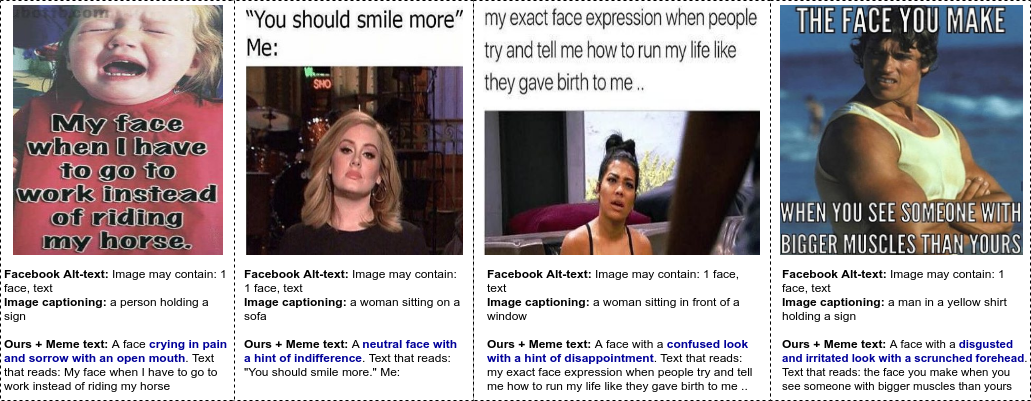

In recent years, there has been an explosion in the number of memes being created and circulated on online social networks. Despite their rapidly increasing impact on how we communicate online, meme images are virtually inaccessible to visually impaired users. Existing automated assistive systems, which were primarily devised for natural photos on social media, overlook the specific fine-grained visual details in meme images. In this paper, we concentrate on describing one such prominent visual detail: the meme face emotion. We propose a novel automated method that enables visually impaired social media users to understand and appreciate meme face emotions with the help of rich textual captions. We first collect a challenging dataset of meme face emotion captions to support future research in face emotion understanding. We then design a two-stage approach that significantly outperforms baseline approaches across all the standard captioning metrics and also generates richer, more discriminative captions. By validating our solution with visually impaired social media users, we show that our emotion captions enable them, for the first time, to understand and appreciate one of the most popular classes of meme images encountered on the Internet.

Paper

Data

We present a face emotion captioning dataset of about 2,000 face images taken from reaction meme images. Each face is annotated with three captions, for a total of about 6,000 rich face emotion captions. The dataset can be requested for research purposes using the link below.

Acknowledgements

We thank the Youth4Jobs foundation for helping us conduct a user study with visually impaired social media users.